Cognitive technologies could provide banks with new efficiencies, capabilities and innovations. But they could also cause a future crisis if not properly controlled. By Justin Pugsley.

What is happening?

There is much excitement surrounding artificial intelligence (AI) and machine learning, and the banking sector has definitely caught the bug (excuse the pun). Much like the UK’s Financial Conduct Authority, some banks hold their own ‘hackathons’ while many others are supporting AI start-ups.

There is no doubt that cognitive technologies are an exciting new frontier that could be a game-changer for banking, but bankers need to make sure they are transformative in a positive way. These technologies have key issues that need addressing. For a start, they can be very complex, to the extent that even their creators don’t always entirely understand how they function (or think).

Sound familiar? The 2007-09 global financial crisis was exacerbated by very complex derivatives products driven by ‘black boxes’ that few properly understood. There is another parallel to be drawn with the highly sophisticated internal models used by big banks.

This leads to another danger: as with some of those complex derivatives, there is uncertainty as to how cognitive technologies will behave, especially under unforeseen conditions. And given the potential for AI to become pervasive in banking, this is worrying – especially when it comes to using unstructured data. If not properly controlled, AI and machine-learning programs could draw erroneous conclusions from unrelated data sets, leading to all kinds of unexpected and even dangerous outcomes.

Another factor is that with enough computing power, these programs could make important decisions at lightning speeds, long before human intervention has the chance to stop them doing serious damage.

Why is it happening?

Bankers see huge potential for cognitive technologies to help them know their customers better and to combat money laundering and terror-related finance. But it goes beyond that – for example, to powering chatbots, credit scoring, algorithmic trading, risk assessment and forecasting, and to driving capital efficiency and capital allocation.

These technologies could also reduce costs by cutting many jobs, and could enhance the capabilities of the mundane programs that harvest vast amounts of data for risk analysis and regulatory purposes.

What do bankers say?

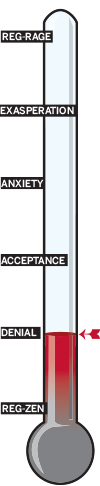

Amid all the excitement, bankers do not yet seem particularly concerned about the risks, though some compliance and risk departments are starting to pay attention. Admittedly, these technologies have not yet been scaled up, nor are they running major parts of a bank, but it may only be a matter of time before they do.

Many see cognitive technologies delivering a competitive edge along with that much-prized (but usually lacking) quality of differentiation. Not only will they help create new, better-targeted financial products, but they could improve product pricing by better identifying risks, possibly in the blink of an eye. It is quite possible the big banks may one day engage in ‘algorithmic’ arms races to grab market share.

Unfortunately, it is clear that many compliance departments are currently not up to speed on AI – unsurprising, as many staff have legal, rather than computer science, backgrounds. At a recent conference, one consultant related a story in which someone on a front desk of a big bank wrote an algorithm in a programming language that only one other person in the bank understood: a clear control failure.

Will it provide the incentives?

Banks have a huge incentive to get ahead of the curve in controlling the cognitive technologies they deploy, given there is so much that could go wrong. Left unchecked, cognitive technologies could do anything from misallocating risk to discriminating against certain demographics – thus leaving a bank exposed to systemic and reputational risk.

Banks must implement strict governance codes covering the use of cognitive technologies and be able to answer the questions: “What is the worst that can happen and how can it be stopped from happening?” This is an area where compliance and risk must be fully engaged, and they need their own tech experts to ask the difficult questions. Otherwise, expect supervisors to end up asking them instead – along with lots of new prescriptive rules and fines.

Cognitive technologies also need to be governed by a sense of perspective and a ‘conscience’, which currently is best provided by humans. For derivatives and internal models, regulators have already demanded simplification, standardisation and robust controls, to the chagrin of many bankers who have seen profitability reduced.

Should banks show themselves unable to control complex ‘rogue’ algorithms, they could find that they will never again be trusted by society with highly complex, but profitable, products.